In this blog post, we’ll tell you all about the testing process of Cryowar – from manual to automation and beyond.

First Steps

We started thinking about automation tests at a fairly late stage of development: the game’s core framework was already well developed, and until then we had been testing every feature manually. As time passed, we became increasingly determined to build a system in which all of the game’s core functionality is verified by automated tests, because of the clear benefits such a system would bring:

- Cryowar is a real-time multiplayer competitive game with many interactions between the players and the environment. The room for bugs is therefore large, and we couldn’t manually step through every scenario looking for a specific issue. Instead, we opted for tests that let the game play itself and log each case where the outcome differed from the intended result;

- Additionally, we wanted to keep evolving the game and respond to player feedback as quickly as possible: bug fixes and content patches often need to ship within days. Every update carries a chance of breaking some other feature of the game, and running full manual playthroughs after each one would be impossible. Automated tests that verify specific features or interactions made shipping new updates a much, much easier task;

- We’re a small team without many manual testers, so we want every new build tested within a manageable budget of time and resources. This is where an automated testing system really shines with its flexibility: once you’ve built up some experience with it, you can test at more than one point in the pipeline, have the automation verify each new commit, run tests outside office hours, and plan around the results.

Automation in Unreal Engine 4

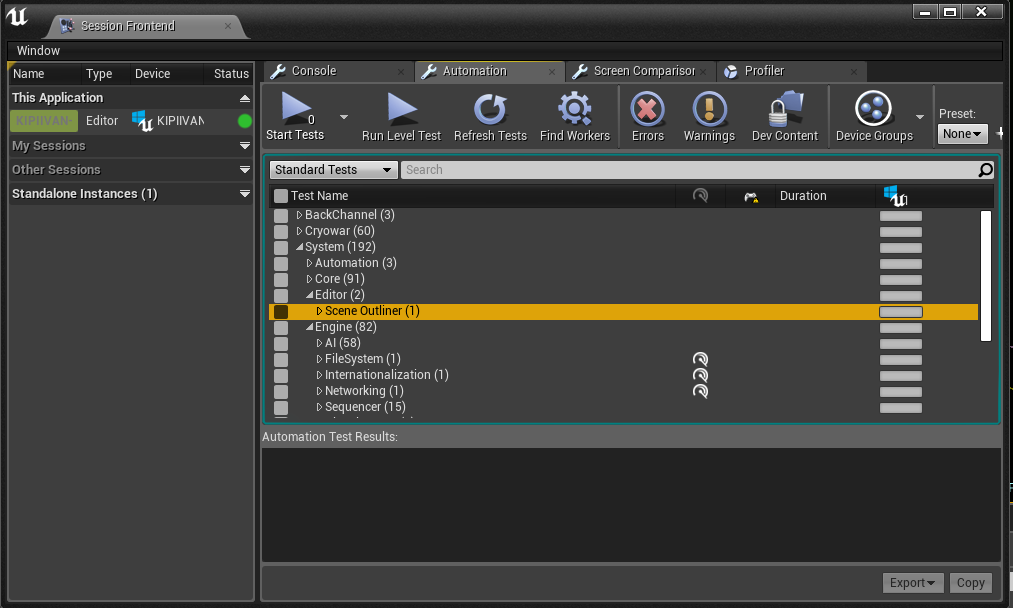

UE4’s Automation Testing System is already quite mature: you can run tests directly from the Unreal Editor or, to save your developers some time, trigger them from a custom build system without anyone having to launch and play the game.

We started with a few unit tests, just to poke around and see how the various components of our code fit together. Unit tests are quick tests performed at the API level; usually, each one checks a specific operation on a small, testable part of the code.

For example, a few of them verify that our custom containers and types work as intended (after all, we never want our in-game currency to go negative!). Others check whether we’re in the correct Game Mode, whether all the game objectives are present on a specific map, and whether exactly the right number of players is in the game.
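To make the "currency must never go negative" check concrete, here is a minimal standalone C++ sketch. It is not Cryowar’s code: the `CurrencyWallet` type and its methods are hypothetical, and plain `assert` stands in for UE4’s automation macros (a test declared with `IMPLEMENT_SIMPLE_AUTOMATION_TEST` would use `TestTrue`/`TestEqual` instead) so that it compiles outside the engine.

```cpp
#include <cassert>
#include <cstdint>
#include <stdexcept>

// Hypothetical currency type whose balance can never drop below zero.
class CurrencyWallet {
public:
    // A negative starting amount is clamped to zero.
    explicit CurrencyWallet(std::int64_t initial) : balance_(initial < 0 ? 0 : initial) {}

    std::int64_t Balance() const { return balance_; }

    void Add(std::int64_t amount) {
        if (amount < 0) {
            throw std::invalid_argument("Add expects a non-negative amount");
        }
        balance_ += amount;
    }

    // Rejects negative costs and overdrafts; on failure the balance is unchanged.
    bool TrySpend(std::int64_t cost) {
        if (cost < 0 || cost > balance_) {
            return false;
        }
        balance_ -= cost;
        return true;
    }

private:
    std::int64_t balance_;
};

// Unit-test style checks of the invariant "balance >= 0".
void TestCurrencyNeverNegative() {
    CurrencyWallet wallet(100);
    assert(wallet.TrySpend(40));
    assert(wallet.Balance() == 60);
    assert(!wallet.TrySpend(61));   // an overdraft is rejected...
    assert(wallet.Balance() == 60); // ...and leaves the balance untouched
    assert(!wallet.TrySpend(-5));   // a negative cost would be a hidden top-up
    wallet.Add(15);
    assert(wallet.Balance() == 75);
    assert(CurrencyWallet(-10).Balance() == 0);
}
```

Rejecting the operation, rather than clamping the result to zero, keeps a failed purchase from silently eating the player’s remaining balance; which behaviour a real game wants is a design decision, not something this sketch settles.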

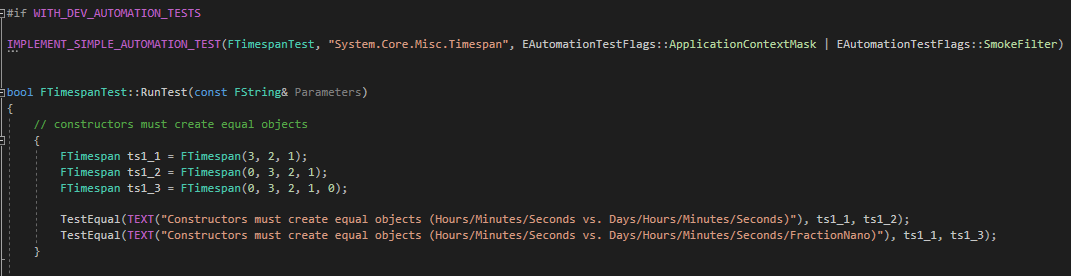

Here’s an example taken from UE4 – this test verifies that all of the timespan constructors return the same result.
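The engine test pictured above targets UE4’s own `FTimespan` type. As a rough, engine-free analogue of the same idea, the sketch below builds one span of time several different ways with standard C++ `std::chrono` and asserts that every construction agrees. It illustrates the testing pattern only; it is not a port of the UE4 test.

```cpp
#include <cassert>
#include <chrono>

// "All constructors agree" check: the same span (1 day, 2 hours, 3 minutes,
// 4 seconds = 93,784 s) built in several different ways.
void TestTimespanConstructorsAgree() {
    using namespace std::chrono;

    const seconds fromComponents = hours(24) + hours(2) + minutes(3) + seconds(4);
    const seconds fromSeconds(93784);
    const milliseconds fromMilliseconds(93784000);
    const seconds fromFloatingPoint = duration_cast<seconds>(duration<double>(93784.0));

    // Comparisons across different units convert both sides to a common unit first.
    assert(fromComponents == fromSeconds);
    assert(fromSeconds == fromMilliseconds);
    assert(fromFloatingPoint == fromComponents);
    assert(fromComponents.count() == 93784);
}
```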

Running with the Bots

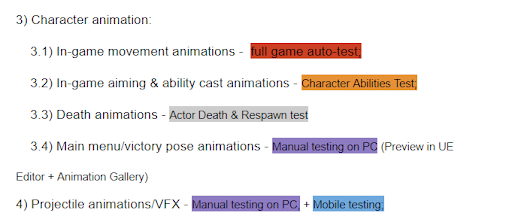

Next, we used the game’s extensive feature list to plan our subsequent automation tests, so that they would cover the core features as thoroughly as possible. Here’s a snippet of our feature list:

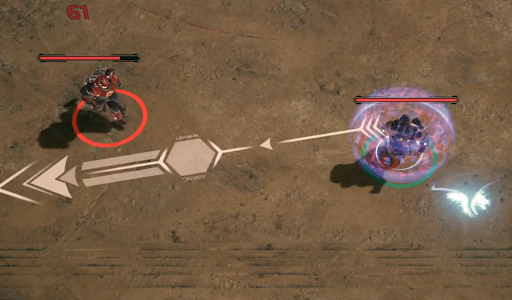

Since Cryowar consists of short, 3-minute matches, an obvious next step was an integration test that plays the game by itself. Integration tests are the most complex type of test in the Unreal Editor: they usually involve building an entire map that tries out a specific scenario and reports the result. The AI was already in place, so I just had to create a new map, set up some rules, spawn the bots, and let them fight it out among themselves. We could then leave the automation running in the background, essentially playing the game for hours on end. This let us catch quite a few rare bugs that only show up once every hundred games or so.
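The shape of such a soak test can be sketched as a loop that plays many bot matches and records which invariants were violated, and in which run. This is a hypothetical, engine-free outline: `MatchStats`, `CheckInvariants` and `RunSoakTest` are illustrative names, and the `playMatch` callback stands in for "load the bot map and let it play one match". Tagging each failure with a seed assumes a match can be made reproducible from a seed, which is not a given in a real-time networked game.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <string>
#include <vector>

// Hypothetical summary of one bot-only match; a real one would track much more.
struct MatchStats {
    int playersAtStart = 0;
    int playersAtEnd = 0;
    int secondsPlayed = 0;
    int negativeCurrencyEvents = 0;
};

struct Failure {
    std::uint32_t seed;  // replaying this seed should help reproduce the problem
    std::string problem;
};

// Invariants that should hold after every match.
std::vector<std::string> CheckInvariants(const MatchStats& s, int expectedPlayers,
                                         int matchLengthSeconds) {
    std::vector<std::string> problems;
    if (s.playersAtStart != expectedPlayers) problems.push_back("wrong player count at start");
    if (s.playersAtEnd != s.playersAtStart) problems.push_back("player dropped during match");
    if (s.secondsPlayed > matchLengthSeconds) problems.push_back("match overran its time limit");
    if (s.negativeCurrencyEvents > 0) problems.push_back("currency went negative");
    return problems;
}

// Plays `matches` bot matches with consecutive seeds and logs every violation
// together with the seed that produced it.
std::vector<Failure> RunSoakTest(const std::function<MatchStats(std::uint32_t)>& playMatch,
                                 std::uint32_t firstSeed, int matches,
                                 int expectedPlayers, int matchLengthSeconds) {
    assert(playMatch && matches >= 0);
    std::vector<Failure> failures;
    for (int i = 0; i < matches; ++i) {
        const std::uint32_t seed = firstSeed + static_cast<std::uint32_t>(i);
        const MatchStats stats = playMatch(seed);
        for (const std::string& problem : CheckInvariants(stats, expectedPlayers, matchLengthSeconds)) {
            failures.push_back({seed, problem});
        }
    }
    return failures;
}
```

For a 3-minute match, `matchLengthSeconds` would be 180; the expected player count is simply a parameter here. The point of logging every failure, instead of stopping at the first one, is that a bug appearing once in a hundred games only becomes visible after many runs.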

Throughout development, we came back to this map again and again, refining its logging and in-game statistics collection so that we could use them across all areas of the game.

Ability Chaos

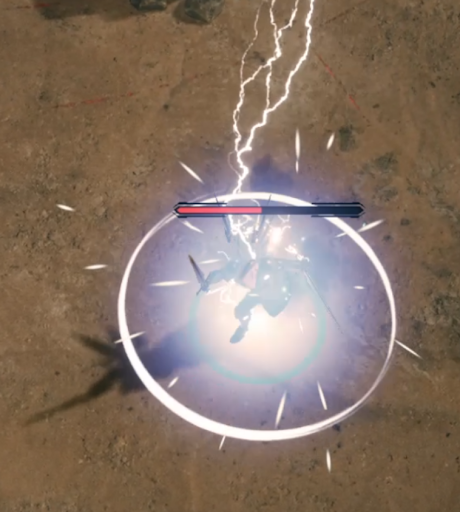

Each playable character in Cryowar has its own abilities, and these interact with one another in a variety of ways. Moreover, each player has two characters but controls only one at a time (think of a tag-team fighting game), and some abilities affect the character in reserve.

The next major type of automation test we built therefore cycles through every character combination and has those characters launch their abilities at each other. With the right logging in place, we could immediately spot when a particular set of abilities didn’t interact as intended, then take the conflicting character/ability combination and isolate the issue further.
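The combinatorial core of such a test can be sketched in a few lines: loop over every caster, every ability, every target and both target states (active or in reserve), and compare what the game does against what the design says it should do. Everything here is hypothetical: the `Effect` categories, the `Character` roster type and the two `Resolver` callbacks (one reading a design table, one asking the game) stand in for whatever Cryowar actually uses.

```cpp
#include <cassert>
#include <functional>
#include <initializer_list>
#include <string>
#include <vector>

// Hypothetical outcome categories for one ability hitting one target.
enum class Effect { None, Damage, Heal, Stun };

struct Character {
    std::string name;
    std::vector<std::string> abilities;
};

struct Mismatch {
    std::string caster;
    std::string ability;
    std::string target;
    bool targetInReserve;
    Effect expected;
    Effect actual;
};

// Answers "what happens when `caster` uses `ability` on `target`?"
using Resolver = std::function<Effect(const std::string& caster, const std::string& ability,
                                      const std::string& target, bool targetInReserve)>;

// Casts every ability of every character at every character (itself included),
// once with the target active and once with it waiting in reserve, and records
// every case where the game's result differs from the design table.
std::vector<Mismatch> CheckAllAbilityInteractions(const std::vector<Character>& roster,
                                                 const Resolver& designTable,
                                                 const Resolver& game) {
    assert(designTable && game);
    std::vector<Mismatch> mismatches;
    for (const Character& caster : roster) {
        for (const std::string& ability : caster.abilities) {
            for (const Character& target : roster) {
                for (bool inReserve : {false, true}) {
                    const Effect want = designTable(caster.name, ability, target.name, inReserve);
                    const Effect got = game(caster.name, ability, target.name, inReserve);
                    if (want != got) {
                        mismatches.push_back({caster.name, ability, target.name, inReserve, want, got});
                    }
                }
            }
        }
    }
    return mismatches;
}
```

Each `Mismatch` names the exact caster, ability, target and reserve state, which is the information needed to pick out a conflicting combination and reproduce it in isolation.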

More specific auto-tests

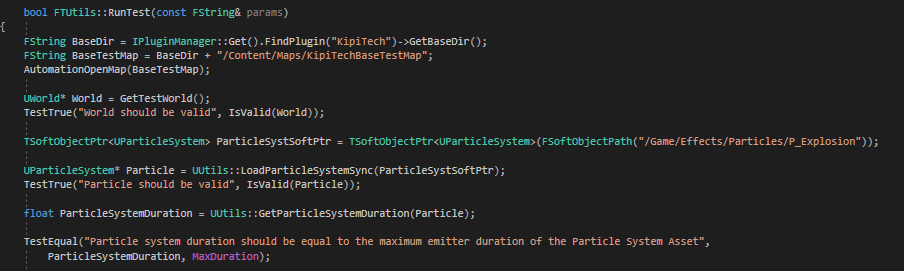

Many of our subsequent tests covered situations specific to the kind of game we were making. For example, if you need something slightly more complex than a simple unit test, but still don’t want to play through a whole match, you may want to create a feature test. Its main advantage is that you can run several of them within a minimal map setup. Here’s an excerpt of a feature test that verifies that our methods for loading particle systems work correctly:

Some particularly interesting feature tests are the ones related to character death. In Cryowar, where each player controls not one but two characters in a tag team, death happens quite often. This creates many scenarios that need verification: for example, a player shouldn’t be able to switch out if one of their characters is dead. In some cases, a character manages to fire a projectile just before dying, and the projectile’s properties still have to be calculated correctly from the fallen character’s stats. So we have various feature tests that try out such interactions before, at, and after the exact moment of a character’s demise.
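Both rules mentioned above can be illustrated with a small, hypothetical model: a tag-team pair that refuses to switch while either character is dead, and a projectile that copies its damage from the caster at the moment it is fired, so a later death cannot change it. `Fighter`, `TagTeam`, `ShotOutcome` and `ShootAroundDeath` are illustrative names, and resolving a shot before a death on the same tick is an assumption of this sketch, not a documented Cryowar rule.

```cpp
#include <algorithm>
#include <cassert>
#include <utility>

// Hypothetical, minimal model of one fighter in a tag-team pair.
struct Fighter {
    int attack;
    bool dead;
};

struct TagTeam {
    Fighter active;
    Fighter reserve;

    // Switching out is only allowed while both characters are alive.
    bool TrySwitch() {
        if (active.dead || reserve.dead) {
            return false;
        }
        std::swap(active, reserve);
        return true;
    }
};

struct ShotOutcome {
    bool fired;
    int damage; // snapshotted from the caster at the moment of firing
};

// Replays a tiny timeline in which the caster fires at `fireTick` and dies at
// `deathTick`. On a shared tick the shot resolves first (assumed ordering).
ShotOutcome ShootAroundDeath(int attack, int fireTick, int deathTick) {
    assert(fireTick >= 0 && deathTick >= 0);
    Fighter caster{attack, false};
    ShotOutcome outcome{false, 0};
    for (int tick = 0; tick <= std::max(fireTick, deathTick); ++tick) {
        if (tick == fireTick && !caster.dead) {
            outcome = ShotOutcome{true, caster.attack}; // copy the stat now
        }
        if (tick == deathTick) {
            caster.dead = true;
            caster.attack = 0; // any later read of the caster would see this
        }
    }
    return outcome;
}
```

Calling `ShootAroundDeath` with the fire tick before, equal to, and after the death tick mirrors the "before, at, and after" structure described above: the first two should fire with the caster’s original attack, and the last should not fire at all.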

Non-automated last thoughts on Automation

Keep in mind that manual testing still has its place: we humans are still much better than any machine at spotting visual and audio flaws in a game. Playing the game by hand also leaves far more room for exploration, which uncovers problems nobody thought to consider when preparing the automation tests. The best way to test a game thoroughly, therefore, is still to combine manual and automated testing carefully, playing to the strengths of each.

Thanks for taking the time to read this blog post! For any comments or questions, join us in our Discord server. You can also follow us on Facebook, Twitter, and Instagram. See you there!